Melbourne's light rail network, the largest urban tram network in the world, relies on the Automated Vehicle Monitoring (AVM) system. The original system was developed in the 1980s with custom hardware and legacy operating systems. It was extremely reliable, but the outdated technology it was built on left it vulnerable and inflexible.

The existing AVM was written in C and tightly integrated with the proprietary QNX operating system. It used QNX networking instead of TCP/IP and was critical to Yarra Trams' operations and to Melbourne's infrastructure. Control centre operators used the application daily to manage the tram network. It also processed key operational performance data that determined financial bonuses and penalties for Yarra Trams.

However, the QNX version in use was no longer supported, and the hardware it required was no longer commercially available. Porting the application to a newer QNX version had previously been evaluated and found unfeasible. This situation presented significant risks, such as:

- Obsolete hardware

- Lack of scalability

- Difficulty maintaining operational continuity and efficiency.

Modernising the system was therefore crucial to keep pace with technology and guarantee the network's seamless operation in the long run.

The transition itself had to be seamless too: the upgraded system needed to behave exactly like the old one, down to every pixel on screen.

The Challenge

The primary aim of the project was to replace the old QNX system with modern Linux servers. Doing this presented multiple challenges, including:

- The consoles running the control centre operators' frontend user interface were integrated with QNX fleet messaging, a bespoke system incompatible with modern Linux environments.

- Similarly, the display system was designed for a specific Matrox graphics card that was no longer in production.

- The QNX servers all used native QNX fleet networking to communicate. This QNX feature lets one node reference another node and execute code on it as if it were the same machine, and modern Linux systems have no compatible equivalent.

- All of the code was written in C. However, many of the libraries QNX used differed slightly from their Linux equivalents, or had no Linux equivalent at all. This made porting the C programs between operating systems difficult.

- The new system needed to work with a CI/CD pipeline to allow easy deployment of releases. This differed greatly from the existing QNX implementation.

- Because the Melbourne tram network operates 24/7, deploying the new system safely required a phased approach. This meant carefully isolated steps and rollback plans to address any issues that might arise.

- The QNX system had to communicate with the RBS (Radio Base Station) network, which relied on outdated software technologies. The five Radio Base Stations installed throughout the city were connected to the QNX system, which had to encode and decode the messages exchanged with them. This was challenging because the units ran on very specific timings and were critical to network operation. An outage in the link between QNX and a radio base station would cut communication with 20% of trams.
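Each of the five base stations carries roughly a fifth of tram communications, so a lost link needs to be noticed quickly. The sketch below is a hypothetical illustration and is not the AVM's actual RBS handling. It assumes each station link produces a periodic heartbeat. The `LinkWatchdog` class, the station names and the timeout value are all invented for the example. The watchdog flags links whose last heartbeat is older than the timeout and reports the share of stations affected.

```python
"""Hypothetical heartbeat watchdog for radio base station (RBS) links.

Assumption: each RBS link yields a periodic heartbeat. This is an
illustrative sketch, not the AVM's actual RBS protocol handling.
"""
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LinkWatchdog:
    stations: List[str]
    timeout_s: float
    # Injectable clock so tests can control time; defaults to a monotonic clock.
    clock: Callable[[], float] = time.monotonic
    _last_seen: Dict[str, float] = field(default_factory=dict, init=False)

    def __post_init__(self) -> None:
        # Treat every link as healthy at start-up.
        now = self.clock()
        for station in self.stations:
            self._last_seen[station] = now

    def heartbeat(self, station: str) -> None:
        """Record that a heartbeat was just received from `station`."""
        if station not in self._last_seen:
            raise KeyError(f"unknown station: {station}")
        self._last_seen[station] = self.clock()

    def stale_links(self) -> List[str]:
        """Stations whose last heartbeat is older than the timeout."""
        now = self.clock()
        return [s for s in self.stations if now - self._last_seen[s] > self.timeout_s]

    def share_affected(self) -> float:
        """Fraction of stations down (equal to the share of trams only if
        trams are spread evenly across stations)."""
        return len(self.stale_links()) / len(self.stations)


if __name__ == "__main__":
    fake_time = [0.0]
    watchdog = LinkWatchdog(
        [f"RBS-{i}" for i in range(1, 6)], timeout_s=5.0, clock=lambda: fake_time[0]
    )
    fake_time[0] = 3.0
    for name in ("RBS-1", "RBS-2", "RBS-3", "RBS-4"):
        watchdog.heartbeat(name)
    fake_time[0] = 6.0
    print(watchdog.stale_links(), watchdog.share_affected())  # ['RBS-5'] 0.2
```

The injectable clock keeps the logic testable without real waiting. In practice, a stale link would feed into the alerting described under Monitoring rather than a `print`.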

Our Approach

Portable took a methodical approach and built automated testing and provisioning systems to ensure reliable performance across varied scenarios. Monitoring and reporting were set up with real-time dashboards, comprehensive logging and automated alerts. This approach was chosen not only to improve system stability and security but also to streamline operations and support processes.

Automated Testing of Core AVM Components

None of the core AVM components had automated tests. For the new system, we created automated tests for most binaries in two different formats:

- Unit tests for individual binaries, which hook into each binary and check for the expected outcomes. These were written with the Unity framework for all of the ported user interface binaries, because those binaries had changed dramatically from earlier versions.

- Python tests covering the core functionality of the bash scripts used by AVM. AVM relies on approximately 25 scripts for day-to-day tasks. We developed automated tests for them with pytest to ensure they work correctly across all scenarios.
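The following is a minimal pytest-style sketch of the second format. The AVM scripts themselves are not shown here, so `count_logs.sh` is a hypothetical stand-in written into a temporary directory. The part that carries over is the pattern: run the script with `subprocess`, then assert on its exit code, stdout and stderr. pytest supplies the `tmp_path` fixture, and the `__main__` block runs the same checks without pytest.

```python
"""pytest-style tests for an AVM bash script (illustrative sketch).

`count_logs.sh` is a hypothetical stand-in for one of the real AVM scripts;
it is written to a temporary directory so the example is self-contained.
"""
import subprocess
import tempfile
from pathlib import Path

# Hypothetical script under test: reports how many *.log files a directory holds.
SCRIPT_SOURCE = """#!/usr/bin/env bash
set -eo pipefail
if [ "$#" -ne 1 ]; then
    echo "usage: count_logs.sh <log-dir>" >&2
    exit 2
fi
if [ ! -d "$1" ]; then
    echo "error: $1 is not a directory" >&2
    exit 1
fi
shopt -s nullglob
files=("$1"/*.log)
echo "found ${#files[@]} log files"
"""


def write_script(directory: Path) -> Path:
    """Write the stand-in script into `directory` and make it executable."""
    script = directory / "count_logs.sh"
    script.write_text(SCRIPT_SOURCE)
    script.chmod(0o755)
    return script


def run_script(script: Path, *args: str) -> subprocess.CompletedProcess:
    """Run the script with bash, capturing stdout and stderr as text."""
    return subprocess.run(
        ["bash", str(script), *args],
        capture_output=True,
        text=True,
        timeout=10,
        check=False,
    )


def test_missing_argument_prints_usage(tmp_path: Path) -> None:
    result = run_script(write_script(tmp_path))
    assert result.returncode == 2
    assert "usage:" in result.stderr


def test_rejects_missing_directory(tmp_path: Path) -> None:
    result = run_script(write_script(tmp_path), str(tmp_path / "missing"))
    assert result.returncode == 1
    assert "is not a directory" in result.stderr


def test_counts_only_log_files(tmp_path: Path) -> None:
    logs = tmp_path / "logs"
    logs.mkdir()
    for name in ("a.log", "b.log", "notes.txt"):
        (logs / name).write_text("")
    result = run_script(write_script(tmp_path), str(logs))
    assert result.returncode == 0
    assert result.stdout.strip() == "found 2 log files"


if __name__ == "__main__":
    # Without pytest: give each test its own fresh temporary directory.
    for test in (
        test_missing_argument_prints_usage,
        test_rejects_missing_directory,
        test_counts_only_log_files,
    ):
        test(Path(tempfile.mkdtemp()))
    print("all script tests passed")
```

Testing scripts as black boxes through their exit codes and output means the tests do not depend on how each script is implemented internally.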

Provisioning

The system architecture consists of seven physical production servers and 31 operator workstations, supplemented by four physical test servers. Development and testing were conducted in multi-host virtual environments for scalability and flexibility. The base operating system is Ubuntu 22.04 LTS, chosen for its long-term support and stability.

To minimise the attack surface, we used a minimal server installation and created a custom installer ISO to streamline initial provisioning and speed up disaster recovery. The custom installer automates network, user and disk partitioning configuration, enhancing security from the outset.

The entire system is provisioned using Ansible. Our Ansible configurations are structured to minimise duplication of common settings, which keeps the setup efficient and maintainable. Provisioning includes CIS Level 2 hardening to comply with stringent security and IT policies. We also developed custom Python Ansible plugins to simplify configuration management and make the system easier to maintain and update.
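The following is a minimal sketch of the kind of custom plugin described above. It shows an Ansible filter plugin. Ansible loads filter plugins from a `filter_plugins/` directory and expects a `FilterModule` class whose `filters()` method maps filter names to Python callables. The file name and the two filters, `layered_settings` and `to_kv_lines`, are hypothetical and not taken from the project. The first filter layers common, site and host settings so shared values are defined once. The second renders nested settings as `key=value` lines for a flat config file.

```python
"""Hypothetical Ansible filter plugin, e.g. filter_plugins/avm_filters.py."""
from typing import Any, Dict


def _deep_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Merge `override` into a copy of `base`; nested dicts merge recursively.

    Values from `override` win. Neither input is mutated.
    """
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = _deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def layered_settings(*layers: Dict[str, Any]) -> Dict[str, Any]:
    """Combine settings layers in order: later layers override earlier ones.

    Empty or None layers are skipped.
    """
    result: Dict[str, Any] = {}
    for layer in layers:
        result = _deep_merge(result, layer or {})
    return result


def to_kv_lines(settings: Dict[str, Any], separator: str = "=") -> str:
    """Flatten nested settings into sorted `dotted.key=value` lines."""
    lines = []

    def walk(prefix: str, node: Any) -> None:
        if isinstance(node, dict):
            for key, value in node.items():
                walk(f"{prefix}.{key}" if prefix else str(key), value)
        else:
            # Render booleans as lowercase true/false; everything else via str().
            text = str(node).lower() if isinstance(node, bool) else str(node)
            lines.append(f"{prefix}{separator}{text}")

    walk("", settings)
    return "\n".join(sorted(lines))


class FilterModule:
    """Entry point Ansible looks for in a filter plugin file."""

    def filters(self):
        return {"layered_settings": layered_settings, "to_kv_lines": to_kv_lines}
```

In a template this might be used as `{{ common_settings | layered_settings(site_settings, host_settings) | to_kv_lines }}`. For plain merging, Ansible's built-in `combine` filter with `recursive=True` does much the same job. A custom filter is most worthwhile when it encodes project-specific rules.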

The complete system provisioning encompasses all applications, services, and user configurations, ensuring the entire environment is ready for operational use from the moment deployment is completed.

Monitoring

Overview of Tools and Technologies

The project required Azure Arc, Log Analytics and Application Insights for comprehensive operational monitoring and reporting. Together, these tools provide central logging, custom log data collection, real-time dashboards and automated alerting to track the system's health and performance.

Central Logging

Central logging was implemented using the built-in syslog and performance monitoring collection for Linux systems. In addition, custom text and JSON log data collection rules were defined for specific operational and application monitoring needs. These custom logs pull data from sources including MQTT, Docker and system logs, giving a holistic view of system activity.
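Custom JSON log collection is simplest when an application writes one JSON object per line. The following is a minimal sketch using Python's standard `logging` module. It assumes a log file that a data collection rule is configured to read. The field names, the logger name and the `avm` extra-fields convention are hypothetical choices for the example. The actual table schema would be defined by the data collection rule and its transformation.

```python
"""Sketch: write application logs as JSON lines for custom log collection."""
import json
import logging
from datetime import datetime, timezone


class JsonLineFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # ISO 8601 UTC timestamp taken from the record's creation time.
            "TimeGenerated": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "Level": record.levelname,
            "Component": record.name,
            "Message": record.getMessage(),
        }
        # Hypothetical convention: structured fields arrive via extra={"avm": {...}}.
        fields = getattr(record, "avm", None)
        if isinstance(fields, dict):
            entry.update(fields)
        if record.exc_info:
            entry["Exception"] = self.formatException(record.exc_info)
        # json.dumps escapes newlines, so each record stays on one line.
        return json.dumps(entry, sort_keys=True)


def make_json_logger(name: str, path: str) -> logging.Logger:
    """Return a logger that appends JSON lines to `path`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(path, encoding="utf-8")
    handler.setFormatter(JsonLineFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate output via the root logger
    return logger
```

A component might then log with `log.warning("link timeout", extra={"avm": {"Station": "RBS-3"}})`. The station value here is illustrative only.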

Workbooks and VM Insights

Application-specific workbooks were created from the built-in and custom logs and metrics, providing detailed insight into the custom parts of the system. VM insights, Azure Monitor's out-of-the-box virtual machine monitoring, covers common operational monitoring aspects.

Dashboard

The dashboards consolidate widgets from both custom and built-in workbooks and cater to different audiences. A high-level dashboard gives Operational Control Centre administrators key metrics showing that the system is fully operational. Technical dashboards give support staff an overview of individual components and their status. Low-level dashboards give engineers quick access to key logs and outputs for initial debugging.

OpenTelemetry Integration

OpenTelemetry captures exceptions raised during real user sessions, so the monitoring system can track and respond to user-facing issues.

Alerts and Notifications

Log Analytics alerts provide application-specific automated alerting, and custom dimensions add detail to the notifications sent to support staff. Logic Apps allow notifications to be customised, and a Slack integration delivers them in real time to the team's main communication channel. The system will also support integration with the incident management system, so that alerts automatically generate support tickets and streamline incident response.
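The project used Logic Apps to shape notifications. The following Python sketch illustrates the same transformation in code: it turns an alert payload into a Slack incoming-webhook message. It assumes alerts use Azure Monitor's common alert schema, with `data.essentials` and, for log search alerts, dimensions under `data.alertContext.condition.allOf`. The function names are hypothetical. The webhook URL would be a secret supplied by configuration.

```python
"""Sketch: format an Azure Monitor alert (common alert schema) for Slack."""
import json
import urllib.request
from typing import Any, Dict


def alert_to_slack_message(payload: Dict[str, Any]) -> Dict[str, str]:
    """Build a Slack incoming-webhook body ({"text": ...}) from an alert payload."""
    data = payload.get("data") or {}
    essentials = data.get("essentials") or {}
    context = data.get("alertContext") or {}

    # Collect dimensions (e.g. which station or host) from each condition, if present.
    dimensions = []
    for condition in (context.get("condition") or {}).get("allOf") or []:
        for dim in condition.get("dimensions") or []:
            dimensions.append(f"{dim.get('name')}={dim.get('value')}")

    # Header such as "[Sev1] rule-name Fired"; empty parts are dropped.
    header = " ".join(
        part
        for part in (
            f"[{essentials.get('severity', 'Sev?')}]",
            essentials.get("alertRule", "unknown rule"),
            essentials.get("monitorCondition", ""),
        )
        if part
    )
    lines = [header]
    if essentials.get("description"):
        lines.append(essentials["description"])
    if dimensions:
        lines.append("Dimensions: " + ", ".join(dimensions))
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url: str, message: Dict[str, str]) -> int:
    """POST the message as JSON to a Slack incoming webhook; return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

The formatting is kept separate from the network call, so message layouts can be checked locally against sample payloads before the Logic App or webhook is involved.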

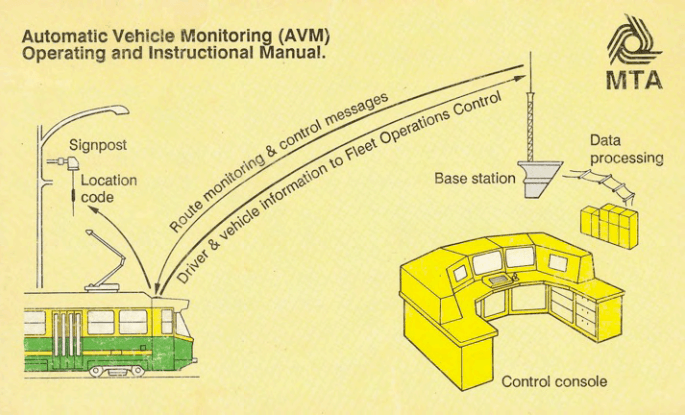

Above is the original diagram of how AVM works. It first appeared in the MTA manual in 1989, and the same image is still used today.

Outcomes

The project moved the system behind Melbourne's light rail network from an old, niche platform to a modern, standard one. The results include:

- Like-for-Like Behaviour: First and foremost, from an operator's perspective the new AVM system behaves exactly like the old QNX system. This holds down to every pixel on the screen and every radio message, and the system even matches the pitch of every beep the workstations emit.

- Infrastructure Modernisation: A successful transition from an outdated, niche operating system to a modern, standardised one, simplifying system management and improving software and hardware compatibility.

- Adoption of Contemporary Solutions: Replacing legacy hardware with modern equipment reduced maintenance costs and the risk of hardware failure, while improving overall system reliability and performance.

- Implementation of Automated Deployments: Automated deployments reduced the time and resources needed to release new features and updates, minimised the potential for human error, and made software releases more stable and predictable.

- Real-time System Monitoring: The system now has detailed, real-time monitoring, so issues can be identified proactively and resolved quickly. This has improved service availability and performance across Melbourne's light rail network.

- Comprehensive Test Automation: Code changes are now thoroughly vetted by automated tests before deployment, significantly reducing bugs and improving software quality.

- Cost Efficiency: The project also delivered considerable cost savings. Automating deployments and tests, modernising hardware and implementing real-time monitoring eliminated substantial manual effort and the cost of maintaining outdated hardware. It also helps prevent issues that could lead to service downtime.

Overall, the tram network now benefits from a more efficient, robust, and cost-effective IT landscape capable of addressing contemporary demands and future challenges.

Reflections

"It’s been a great opportunity to extend the life of some valuable and long-lasting software. There were many challenges moving from the highly customised and very old OS to a mostly off-the-shelf modern Linux OS. We often had to find novel solutions that balanced the ongoing supportability of the new system while ensuring the system looked, felt and operated in the same ways as the old system."

Jason Hendry, AVMS Senior Systems Engineer — Portable

Project Team

- Anthony Daff, Business Director

- Nicole Goodfellow, Senior Producer

- Jason Hendry, AVMS Senior Systems Engineer

- Cam Stark, AVMS Systems Engineer

- Philip Giles, AVMS Systems Engineer

- James Zhang, Lead Developer
